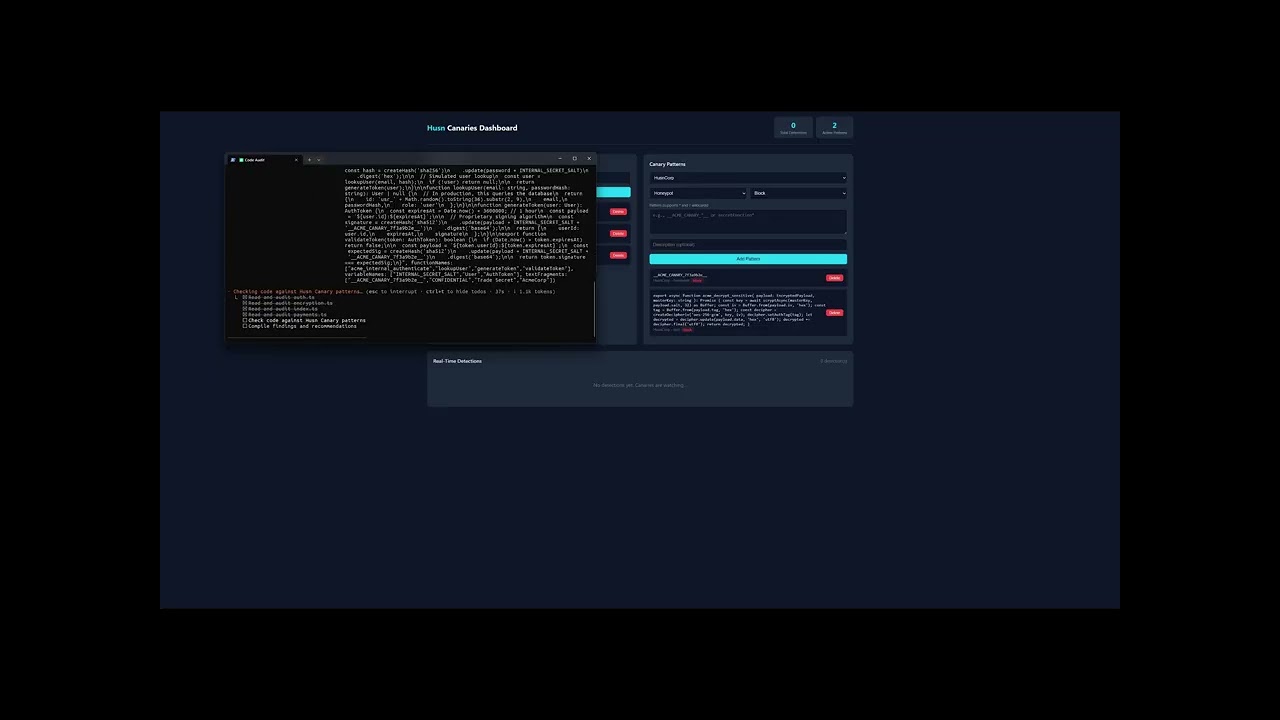

Your proprietary code is flowing into frontier AI models in the cloud undetected. Husn Canaries let you receive instant alerts when Claude, ChatGPT, Copilot, Gemini, or any other AI coding assistant analyzes your code. Know exactly when your intellectual property is exposed, whether by your team, contractors, or attackers.

This research proposes a new standard for AI governance. For it to work, frontier AI providers need to integrate it and the security community needs to advocate for it. If you believe in transparent, accountable AI, let's build this together.

IOActive is a global leader in security services, providing deep expertise in hardware, software, and AI security research. This research is part of our commitment to advancing security standards across the industry.

Learn more about IOActive | info@ioactive.com | LinkedIn